Embedding a Fusion Table map in a WordPress post

Just testing: a Google Fusion Table map embedded in a WordPress blog post.

Here’s the original Fusion Table table. That data is described in this Ottawa Citizen post by Glen McGregor.

Here’s the exact embed code used for the above map:

<iframe src="http://www.google.com/fusiontables/embedviz?viz=MAP&q=select+col6+from+2621110+&h=false&lat=43.50124035195688&lng=-91.65871048624996&z=5&t=1&l=col6" scrolling="no" width="100%" height="400px"></iframe>
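
For anyone who would rather generate that snippet than copy it by hand, here is a minimal Python sketch that rebuilds the same iframe from its query parameters. The function name `build_embed_iframe` and the `MAP_PARAMS` dictionary are made up for illustration and are not part of Fusion Tables or WordPress. The parameter values are copied from the embed code above. My reading of `lat`, `lng` and `z` as map centre and zoom level is a guess, not something documented here.

```python
from urllib.parse import urlencode

# Base URL taken from the embed code in this post.
BASE_URL = "http://www.google.com/fusiontables/embedviz"

# Query parameters copied from the embed code. The meanings of lat/lng/z
# (map centre and zoom) are assumed, not confirmed by the post.
MAP_PARAMS = {
    "viz": "MAP",
    "q": "select col6 from 2621110 ",  # trailing space matches the original "+"
    "h": "false",
    "lat": "43.50124035195688",
    "lng": "-91.65871048624996",
    "z": "5",
    "t": "1",
    "l": "col6",
}


def build_embed_iframe(params, width="100%", height="400px"):
    """Return an iframe tag for the given query parameters (hypothetical helper)."""
    # urlencode uses quote_plus by default, so spaces become "+".
    src = f"{BASE_URL}?{urlencode(params)}"
    return (
        f'<iframe src="{src}" scrolling="no" '
        f'width="{width}" height="{height}"></iframe>'
    )


if __name__ == "__main__":
    # Print the tag so it can be pasted into the WordPress HTML editor.
    print(build_embed_iframe(MAP_PARAMS))
```

Only the `width` and `height` arguments reflect changes I made to fit the post, so passing different values there should be enough to resize the map.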

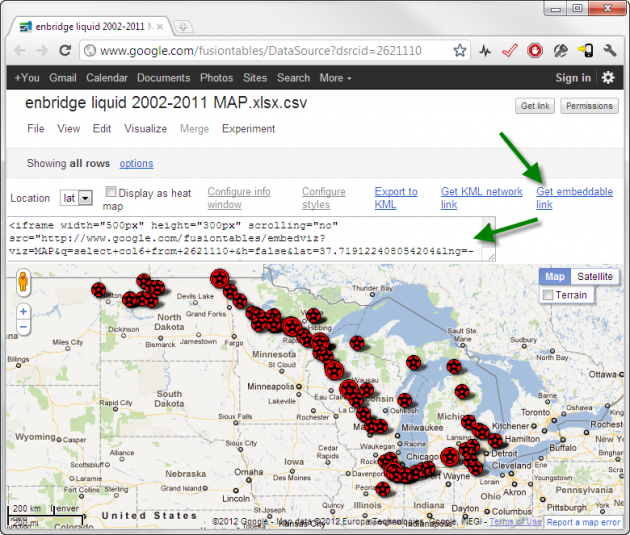

That’s just the default output from the Fusion Table map visualization, with the minor exception of tweaking the height and width a bit to fit my post. That code is available from within the Fusion Table visualization page if you click the “get embeddable link” link. Here’s a screenshot:

I’m posting this because someone was having trouble with this process and I wanted to try it out myself. I didn’t have to make any modifications to my existing WordPress 3.3.1 installation or theme to get it working.

Please note, however, that there is still a Conservative majority.

Update (later the same day): I’m pleased to see that Glen McGregor was able to embed the map on the Edmonton Journal site, and wrote a telling article around it.