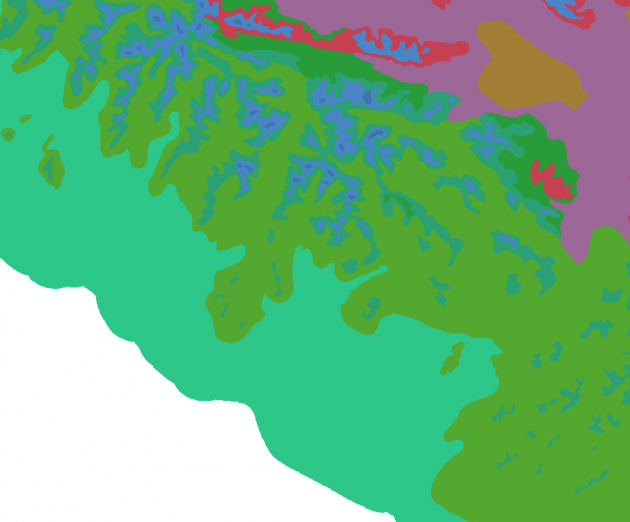

GIS Art from Landscape Biodiversity Project

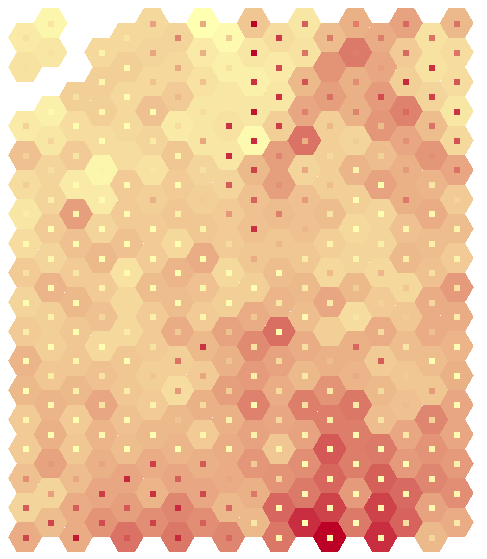

I’m working on a fun project for the BC Forest Practices Board. We’re taking great whackloads of province-wide spatial data and transmogrifying it into handy reports on the physical status of forests in different administrative and ecological zones. And I’m pleased to report that the project is beginning to produce some GIS art.

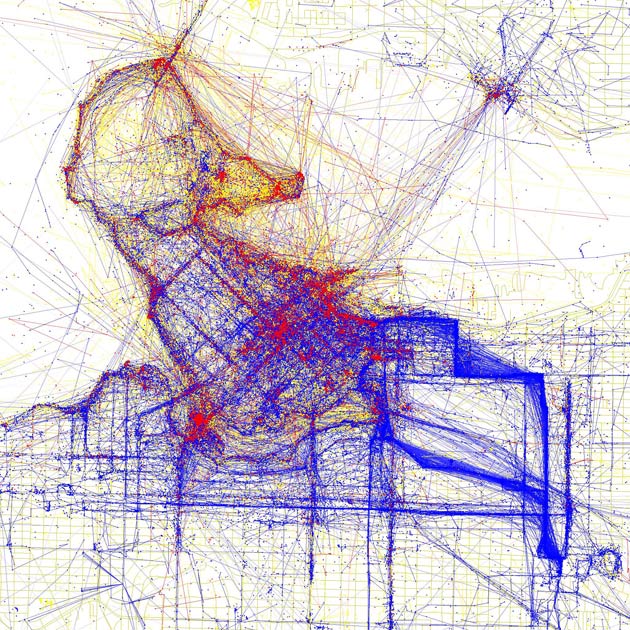

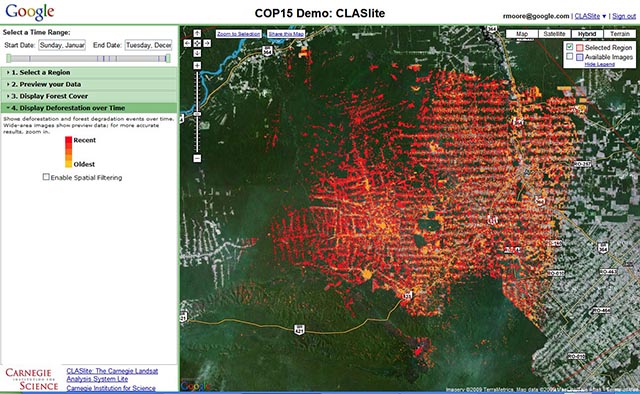

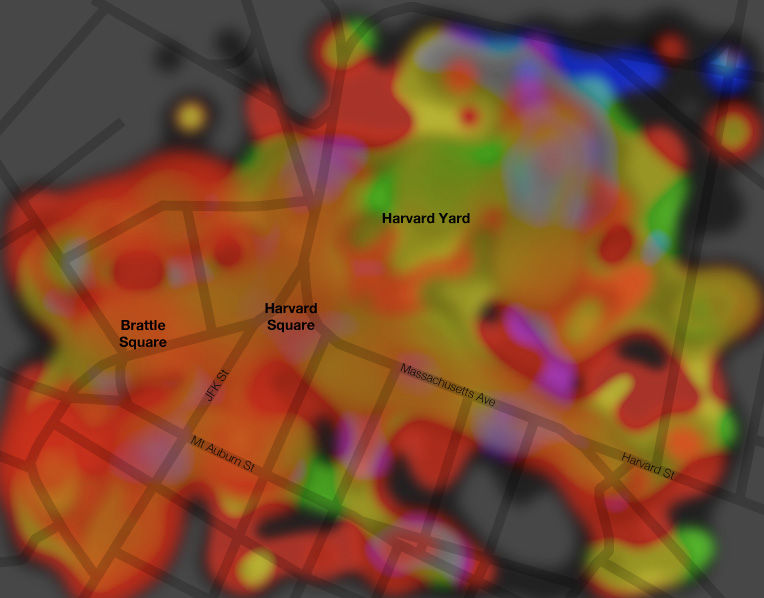

GIS art, for those who haven’t had the pleasure, consists of the serendipitous bits of aesthetic map flotsam that tend to pop up as intermediary products in geographic analysis chains. They’re the recombinant product of the natural attractiveness of landforms, the semi-random automated assignment of colours to landcover classes, and the quasi-organic distortions introduced by algorithms. The images above are relatively unprocessed; for comparison, here is one of my old favourites:

Localized explanatory power of soil water for shapeness of juniper, Strawberry Crater, Wupatki AZ

OK, so maybe it’s not great art. But when GIS art does show up, it’s often a nicely timed distraction from the more abstract “pleasures” of analytical troubleshooting.